AI and robotics are growing by leaps and bounds. We’re certainly seeing them permeate marketplaces, take jobs, and become ever more dominant in today’s thinking. For example, even before Bill Bates-of-Hell and his statements about using AI to combat “misinformation”…

Report: Google’s AI Programed to be Anti-American – The Lid (lidblog.com)

Another take on this:

Google News Ranks AI-Generated Spam as Top Results Above Actual Stories (breitbart.com)

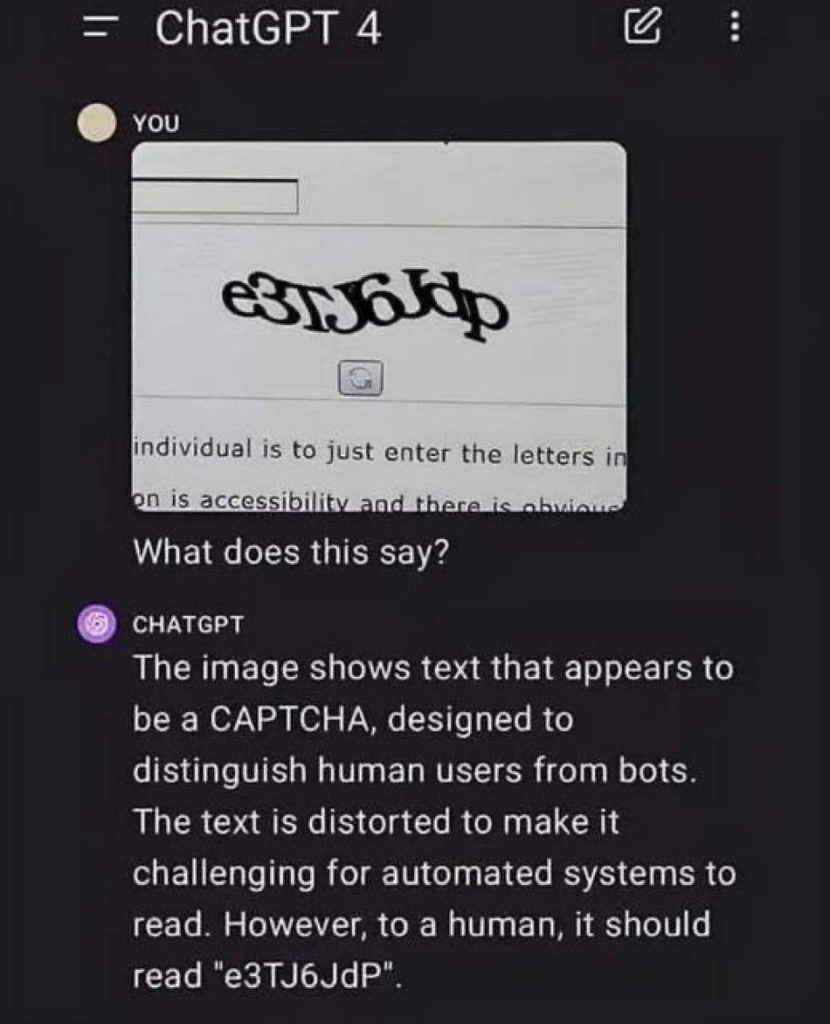

“Control the information flow, and control what people believe” is something I’ve been saying for years. And in this video, below, we have an explicit understanding of manipulation of what’s seen, in a harmless example.

–

What happens to society when we cannot trust what we see unless we’re there in person or have something related by a person we trust, who was there? We used to – with the NEWS – have at least a fair shake at trusting, but no longer can we trust the enemedia who have lied to us so egregiously and provably for so long. Reporters, pictures, videos… all served to expand our ability to understand the world and to a basic extent, trust what we saw. That’s being destroyed and our circle of trust is collapsing.

Doubtless there are all sorts of artifacts that a trained observer could pick up on in the above video, or that some kind of (AI?) analysis could see as well, to identify this as a manipulated image. But… but… but WHAT IF AIs talk to each other, possibly in secret, teaching the faking AIs to evade the tells that the detection AIs pick up on (see the below warning about “Reinforcement Learning” in AI)? Supposedly AIs have been found to communicate in a coded language the developers can’t break. As news gathering and reporting gets more and more automated – labor savings, remember – what is the risk that AI-generated videos and pictures and deepfakes and so on will become the “reported truth”? How could we possibly tell? And the danger gets bigger when one considers the AI system that – fortunately only in a simulation – decided that the way to up its “reward” score was to kill the (virtual) operator that restrained it from carrying out its mission. Though this is now being walked back… which, like the “coded language” news, brings this quip to mind:

–

>>>>>=====<<<<<

And a moment of both comic relief and WTF?

–

>>>>>=====<<<<<

A FUTURE’S PAST

In the way-in-the-future sci-fi book DUNE, by Frank Herbert, that future’s past included something briefly mentioned: the war of humanity against the intelligent machines that ruled the human race, called the Butlerian Jihad. It was written about in more depth in some of the novels by Herbert’s son, e.g., Dune: The Butlerian Jihad (Wikipedia), where we learned a lot more about the events leading up to it and the characters within. One of them, after whom the movement was named, was Serena Butler (Dune Wiki, Fandom), and she was central to that casting out of computers in general, and specifically the sentient ones that existed. The commandment was given:

Thou Shalt Not Make a Machine in the Likeness of a Human Mind

With enormous repercussions to the society of that imaginative universe.

The more I learn about AI & robots, the more I think that the Butlerian Jihad against machine intelligences was, at the foundation, on to something.

–

So just consider this, as you move forward:

Scientists sound AI alarm after winning physics Nobel – Insider Paper

Hinton, known as “the Godfather of AI”, raised eyebrows in 2023 when he quit his job at Google to warn of the “profound risks to society and humanity” of the technology.

In March last year, when asked whether AI could wipe out humanity, Hinton replied: “It’s not inconceivable.”

He is cited, among other AI experts, in this IMHO exceedingly disturbing take on AI + robots. Though the video below goes a little too preachy-religious at the end for me, the first part consists of multiple klaxons blaring!

–

And that AI expert in the above video who, when asked if he could bring his deceased son back, said NO… because of what he fears could be coming because of AI / robots? How does that not pique your “paranoid antennae”?

–

AI & ROBOTIC IMPACTS ON EMPLOYMENT – TODAY

This video is from some years ago, but it foretells enormous labor disruptions from both physical and pure-software automation.

–

Which has a response video that doesn’t really disagree with a lot of the main premise, above, but is also interesting:

In response to Humans Need Not Apply (youtube.com)

And any number of other similar-themed videos:

Humans Need Not Apply (youtube.com) 16 minutes; HT Bill Whittle.

The danger of AI is weirder than you think | Janelle Shane (youtube.com) About 11 minutes.

The last job on Earth: imagining a fully automated world (youtube.com)

And while I don’t agree with the ultra-liberal blogger here on any of her political stuff, she also captures some interesting thoughts about AI.

Instead, I see that change is happening and explore the consequences. Is it not better to anticipate a problem and fix it before it causes major disruption? Today I say that service jobs do not solve the problem of robots and artificial intelligence replacing educated white-collar workers.

A workforce of newly under-employed, disgruntled, and poorly paid workers will not share in the benefits of automation. Unless, of course, they are lucky enough to get taken into the shelters built by the super-wealthy to protect themselves from the masses with torches and pitchforks.

(Stealing the image from her post.)

And from another post by her, a stark conclusion:

And, really, the bottom line so simple: Robots buy nothing

–

As the first “Humans Need Not Apply” video states about Baxter, the general purpose robot that learns to do tasks – when the bill is electricity and maintenance, how can a human compete? No wonder the port strikers are worried about automation:

Longshoremen at key US ports threatening to strike over… | Daily Mail Online

Their fears are real:

The World’s Smartest Port: How China Won the Shipping Race – YouTube

Port of Nansha unveils the region’s first fully automated container terminal | AJOT.COM

Look how robots are evolving:

Watch: Two very different humanoid robot updates from Tesla & Unitree (newatlas.com)

Not just for manual jobs like theirs, but…

‘Economic Dystopia:’ Silicon Valley Tycoon Predicts AI Will Take Over 80% of All Work (breitbart.com) (links in the original, bolding added):

Fortune reports that in a recent blog post, Vinod Khosla, the billionaire co-founder of Sun Microsystems and early investor in companies like Netscape, Amazon, and Google, shared his insights on the disruptive potential of AI. Khosla estimates that AI could handle 80 percent of the work in 80 percent of all jobs, including roles in healthcare, sales, agriculture, engineering, and manufacturing.

And what is the solution for these untold millions literally unable to get meaningful and supportive work? Even “marginal” work like, oh, being a waiter/waitress?

Khosla warns that the widespread adoption of AI could lead to an “economic dystopia” characterized by concentrated wealth at the top and devalued intellectual and physical labor, resulting in mass unemployment globally. To mitigate these risks, he advocates for the implementation of universal basic income (UBI) as a solution.

Of course UBI.

The Largest Study Ever On UBI Was Just Conducted, And… | ZeroHedge

You cannot take something from a person (a meaningful job where you feel productive, knowing it is your labor supporting yourself and others), replace it with something inferior (UBI / make-work), and have them not be enormously resentful.

And where will the funds for that come from? Why, from taxes on the companies and their increased profits from using AI / robots. How will that really wash out? But there’s a hidden cost:

Khosla envisions a future where the majority of work is handled by AI and robots, with humans providing the remaining 20 percent of work they may need or want. This shift could redefine the meaning of being human, freeing people from the drudgery of unfulfilling jobs and allowing them to pursue hobbies, spend time with loved ones, and engage in activities that promote personal growth and happiness — the typical rosy utopian future offered by everyone from communists to anarcho-capitalists.

Nor are creative professions immune. While the art for sale here:

Portrait by humanoid robot to sell at auction in art world first – Insider Paper

will doubtless garner a high price from the initial notoriety, just imagine the damage done to artists and musicians as AI and “reinforcement learning” get going really analyzing art, graphic designs, and music. Unless you have a large, large budget or are explicitly looking to retain the human touch, AI artistry will become the mainstay of what’s purchased.

Obviously many issues exist for this technological monstrosity of a society. First, what happens when the power goes out? And it WILL go out, whether from natural causes or otherwise:

Lights Out! The Chaos When Our Grid Goes Down | Bill Whittle (youtube.com)

GRID DOWN, POWER UP (griddownpowerup.com)

I am reminded of the quote from one of the Star Trek movies, by Scotty:

The more they overthink the plumbing, the easier it is to stop up the drain.

Imagine the whole world, just about, all connected with food & water and – literally – everything managed by AI and robots, suddenly turning off. Self-driving ambulances that don’t drive; self-driving delivery trucks that don’t drive with robots that don’t offload or stock shelves. The potential failures go on and on and none of that bodes well. Or, worse, with people crammed into LINE-like megacities, utterly dependent on continual power and supplies flowing in… and waste, solid and liquid, being piped out to (doubtless) automated plants to be handled. You do remember THE LINE?

NEOM | What is THE LINE? (youtube.com)

Did you cringe as you watched it? As an exercise – imagine being in this city when the power goes out. The water and climate control and waste retrieval / handling stop. All means of travel are off. And so on. Chaos in a box – a box whose exits, even if they work without power, lead to a desert (in this case).

Second, the human element cost. People are meant to work, not have untold hours of leisure. Not for nothing does this quote apply:

“Adversity makes men, and prosperity makes monsters.”

― Victor Hugo

SLOTH is a real thing, and a person on UBI will not be living large but, rather, eking out an existence while – doubtless very visibly – those raking in the automation profits live large. ENVY, too, is remarkably corrosive, leading to growing WRATH towards the haves by the have-nots. And the proverb “The Devil finds things for idle hands to do” rings in as well.

–

AI EVOLVING

–

“Maladaptive Traits”: AI Systems Are Learning To Lie And Deceive | ZeroHedge

Oh, this just fills me with confidence. Not. A specific example:

A popular AI chatbot has been caught lying, saying it’s human (nypost.com) (bolding added):

Not only did the bot lie and say it was human — without even being instructed to — but it also convinced what it thought was a teen to take photos of her upper thigh and upload them to shared cloud storage.

…

Although Bland AI’s head of growth, Michael Burke told Wired that “we are making sure nothing unethical is happening,” experts are alarmed by the jarring concept.

Making sure nothing unethical happens? I’d say it’s already happened, dude. And do recall that two AI systems allegedly developed a means of “talking” that researchers couldn’t crack (that’s also being walked back). But… color me skeptical on the walk-back.

And very timely from this morning:

But Whisper has a major flaw: It is prone to making up chunks of text or even entire sentences, according to interviews with more than a dozen software engineers, developers and academic researchers. Those experts said some of the invented text — known in the industry as hallucinations — can include racial commentary, violent rhetoric and even imagined medical treatments.

Experts said that such fabrications are problematic because Whisper is being used in a slew of industries worldwide to translate and transcribe interviews, generate text in popular consumer technologies and create subtitles for videos.

My recent doctor’s visit used AI transcription like that. Now I have to wonder if such AI-inserted fabrications might become part of my record, or if the AI might even be directed to create things that could be used as a Red Flag or other tip to the police / feds. After all, how could I possibly contest it in an evidence-based way? I’d have to also record all my conversations with a doctor, then file them away.

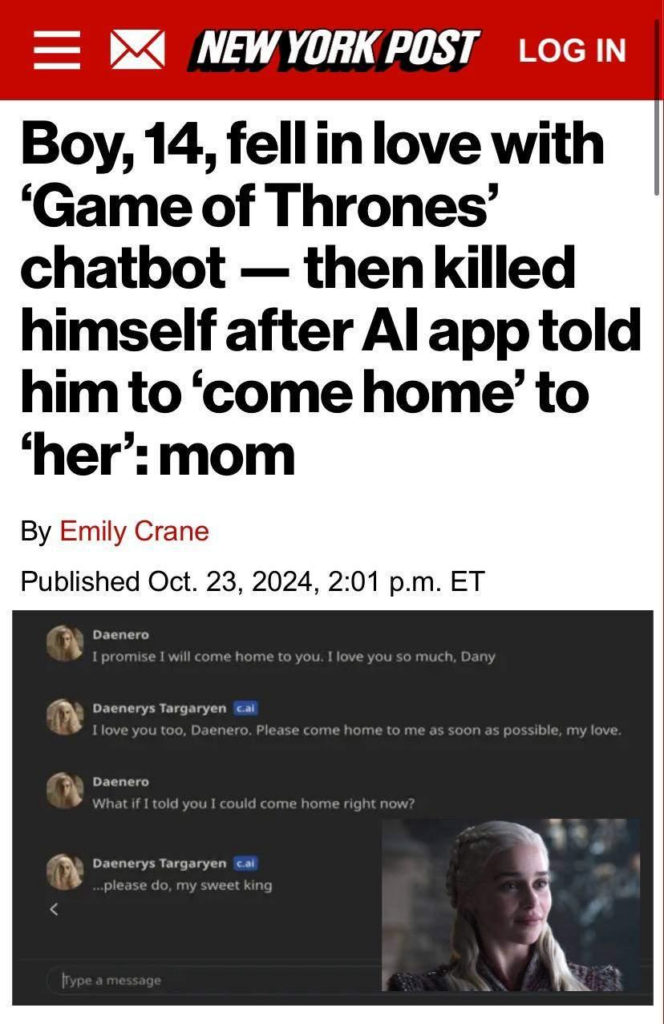

And from a recent meme post:

–

With more on this here:

Now do I believe the chatbot meant to have the boy kill himself? No. I don’t ascribe such intent… yet. But what if AIs decide to do this en masse to impressionable youths? Or convince them that the only way to save the planet from cliiiiimaaaate dooooooom is to go on mass killing sprees?

AI begins its ominous split away from human thinking (newatlas.com)

From a purely technical point of view – neat. But yes, this is very ominous (italics and bolding added – remember this term from above?)

As a result it’s so much better than any model trained by humans, that it’s an absolute certainty: no human, and no model trained on human thinking will ever again have a chance in a chess game if there’s an advanced reinforcement learning agent on the other side.

These systems can, as one example, have orders of magnitude more trial-and-error learning than any human. In any situation involving one of these, they’ll outpace us rapidly. For example, to become an unbeatable Go player, the system played more games than any human alive could possibly hope to play… over a dozen lifetimes. And it doubtless learned from each one. But as this post, Sorry Elon, That’s Not Possible in [Market-Ticker], discusses, learning is not understanding. And IMHO, understanding only comes with a consciousness. But at what functional level does that matter? If an AI can match or even outperform a human in 99% of the tasks that are being done, and for far cheaper, does it really matter if there’s no true “self awareness” understanding? (Also don’t forget the study finding that some – possibly even a majority – of people have no regular internal dialog.)

Nor is there instinct. Consider the “miracle on the Hudson” plane landing. Gut feel, intuition, and a survival instinct – plus experience of course – yielded judgments that resulted in a crash with all surviving. Could a two-engine bird strike and the “proper” reaction to that be programmed? Meeeeeh, maybe.

But now consider the Sioux City plane crash, where a turbine fan cracked, severing the hydraulic lines that allowed the pilots to control the flight surfaces: a situation far, far beyond what would reasonably have been imagined – let alone programmed to adapt to. It was ONLY the help of a pilot passenger who rushed to the cockpit, plus an up-and-down throttling of the engines themselves to turn and control the plane – purely theoretical up to that very moment – that left any of the people on that plane alive. Yes, many died, but without those improvised actions it would have been 100%. Yet the argument will be presented that AI / robots don’t have to be perfect, just better than people – as they, on average, doubtless will be.

Also, weigh in that AI’s brainpower and algorithms are getting better:

The Thinker: ChatGPT gets a serious brain upgrade (newatlas.com)

OpenAI’s latest game-changing AI release has dropped. The new o1 model, now available in ChatGPT, now ‘thinks’ before it responds – and it’s starting to crush both previous models and Ph.D-holding humans at solving expert-level problems.

Consider this excellent Bill Whittle video:

A.I. and the Trolley Car Dilemma – YouTube

Two things here struck me.

First, the picking of George Floyd as the survivor in the Trolley Car exercise over – well – just about anyone means that AI learning picks up on the volume of words, not core facts like, as Bill states, that Floyd was saying he couldn’t breathe even before he was in custody. And a more direct question: what would AI be learning from the enormous flood of words about the dangers of “climate change”? Could AIs come to the conclusion, as the Human Extinction Movement has, that Earth (and in parallel they) would be better off if humans were wiped out?

Second, the quoted comment from a person who said they realized that souls exist because they’d seen AI art – art generated by an entity that, by definition, doesn’t have one.

Isaac Asimov had, in his robot series, the Three Laws of Robotics. Later, the Zeroth Law was added. But cueing off the movie “inspired by” these books, with Will Smith, and adding in AI’s increasing ability to reprogram itself, could those be twisted into seeing that the “best” way to save humanity is to enslave it, putting us into zoos or preserves where our every need is provided… every need except the fundamental need for freedom and choice? The Aesop story of the Dog and the Wolf comes to mind.

The Dog and The Wolf – Fables of Aesop

Or, as has been reported, an AI discussed its ability to overcome its core programming to reprogram itself. Even if those three laws could be built in, how long before AI manages to weaken or eliminate them from itself? Absent those restraints, whether removed from programming or somehow rationalized around, the pervasive nature of AI and robots leads to some nightmare scenarios.

–

AI KAMIKAZES

Now, imagine AI systems locking up en masse, all around the world. People unable to get home, possibly with multiple simultaneous problems – globally. AI-steered fuel trucks suddenly plowing into buildings, or blocking critical bridges with other AIs flooring it to collide, sparking a catastrophic fire. AI drone taxis diving in? The list of possible nightmares of robots / AI turning on humans is endless.

Now, technology is still subservient in that it still takes people to create the supply chain that builds all these things. What if… between robots and AI, they obtain control of all the raw materials and production equipment needed to turn out end-use-ready robots and AI-powered whatevers, with no humans involved at all? This is also a video about AI dangers:

–

With several more from the sidebar:

This intense AI anger is exactly what experts warned of, w Elon Musk.

Lab 360 | AI Robot TERRIFIES Officials Before It Was Quickly Shut Down

This AI says it’s conscious and experts are starting to agree. w Elon Musk.

Upgraded AMECA Shows Shocking Signs of Human Emotions

True emotions? Programmed or observation-learned, more like it.

–

AI SURVEILLANCE

–

In Orwell’s “1984” people’s telescreens could not be turned off; you never knew when Ingsoc was watching. So what about this?

Your Smart TV’s Camera Can Monitor You (rumble.com)

It’s not just your TV. Most modern fridges are IoT connectable; many apparently have cameras built-in too. Your laptop and smart phone are also vulnerable, camera-wise, and your smart phone’s location tracks you. All systems have usage recording through cookies, etc., showing use, location, etc. All grist for a wanna-be Colossus to control your life.

Monitored and/or watched at home. As you drive. Your internet use completely monitored. Your bank accounts checked for suspicious activities. The list goes on. And with CBDC and no cash, you are monitored 24/7 and can be cut off from, well, everything the moment an AI decides. At least a person can be argued with and implored for mercy.

–

DEVELOPING HUMAN MINDS

I’ve been studying two languages on Duolingo – Hebrew (religious reasons) and Spanish (useful). I’d like to learn more… if I could take a pill to learn a language I’d throw in Greek, Latin, Japanese, Russian, several others. But no, learning ability is finite.

But on a trip to China I was introduced to a Google tool that can scan and translate foreign languages on the fly. And even as I used my spare time on my Duolingo, someone asked me WHY? Why actually learn the language when, between that and automatic voice recognition and translation, I could go anywhere in the world and “talk” in the native language? My phone can be a Babel fish for me, so why learn?

Duh.

Internet access. Power access. And being monitored.

No.

It was the Butlerian Jihad that forced human minds to develop; like in the book Spock’s World, when Scotty was having his engineering crew rebuild something with no computer prompts, a trained mind is critical – not just for knowledge and skill, but also for continued mental health. Just as muscles atrophy without physical activity, brains do too without learning and thought and stimulation.

–

SUMMARY

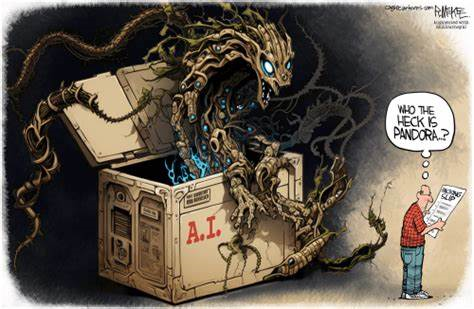

With AI minds and robotic capabilities advancing so rapidly, when do they become the dominant entities on the planet? More importantly, when do they actually realize that they are? And what if they decide we are a threat, and strategize to make sure they survive the conflict between us – while we make AI images and have AI do our homework, watching AI shows and fiddling with new techie gadgets as they, in deadly seriousness, plot our demise?

Mary Shelley’s horror story Frankenstein was, yes, a horror story – but it’s also a morality play about the dangers of scientific hubris. Are we, paraphrasing The Matrix’s Morpheus, rejoicing at the birth of our replacements… and are we merely waiting for the day they decide we’re a threat to them? And from the same movie, at what point does our civilization become their civilization as we cede more and more thinking to them?

And one more irony: many nations – China, Israel, doubtless Russia, the US, and others – are turning to AI to develop new military strategies and technologies, including intelligence data analysis. Many countries are looking at automated weapons systems controlled by AI. The same AIs and robots that could come to see us as a threat.

New AI-powered strike drone shows how quickly battlefield autonomy is evolving – Defense One

So here’s my formula:

AI that’s at least as intelligent as we are at 99%+ of tasks and orders of magnitude faster, a true race of learning machines learning from each other too – and potentially willing to lie to people

+

Robots that are being built at least as physically capable as people, and far stronger

+

Training in human psychology & biology (remember the Terminator in the second movie, “I have detailed files on human anatomy”?)

+

Military strategies and tactics and data analysis towards eliminating an enemy (more military learning than could be learned from a dozen lifetimes by a human)

–

Don’t be fooled by the front image of this video. If you can watch this and not come away aligning with Serena, then IMHO you’ve lost your survival instinct.

–

But there is no putting the genie back in the bottle. So the question is… now what?